Jan 15, 2018

Don’t Call AI “Magic”

M.C. Elish

Artificial intelligence is increasingly used across multiple sectors, and people often refer to its function as “magic.” In this blog post, D&S researcher Madeleine Clare Elish points out that there’s nothing magical about AI and reminds us that the human labor involved in making AI systems work is often rendered invisible.

Read on Points ↗

Mar 20, 2016

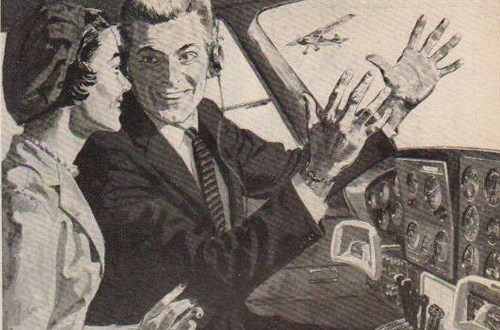

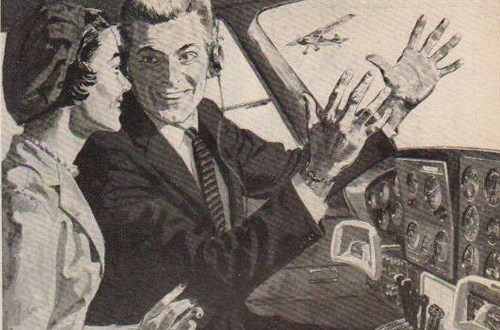

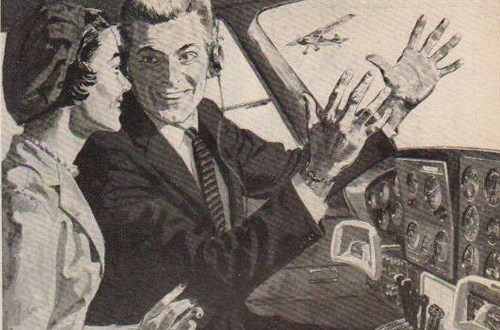

Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction (We Robot 2016)

M.C. Elish

As control has become distributed across multiple actors, our social and legal conceptions of responsibility remain generally about an individual. If there’s an accident, we intuitively — and our laws, in practice — want someone to take the blame. The result of this ambiguity is that humans may emerge as “moral crumple zones.” Just as the crumple zone in a car is designed to absorb the force of impact in a crash, the human in a robotic system may become simply a component — accidentally or intentionally — that is intended to bear the brunt of the moral and legal penalties when the overall system fails.

Download from SSRN ↗

Oct 12, 2016

Regional Diversity in Autonomy and Work: A Case Study from Uber and Lyft Drivers

Alex Rosenblat and Tim Hwang

Preliminary observations of rideshare drivers and their changing working conditions reveal the significant influence of worker motivations and regional political environments on the social and economic outcomes of automation. Technology’s capacity for social change is always combined with non-technological structures of power: legislation, economics, and cultural norms.

Download pdf ↗

Oct 6, 2016

An AI Pattern Language

M.C. Elish, Tim Hwang

How are practitioners grappling with the social impacts of AI systems? An AI Pattern Language presents a taxonomy of social challenges that emerged from interviews with a range of practitioners working in the intelligent systems and AI industry. The book describes these challenges and articulates an array of patterns that practitioners have developed in response.

More information ↗

May 25, 2015

The Counselor

Robin Sloan

A dying man squares off against a machine of his own creation, a system that deploys a formidable arsenal of persuasive weaponry in an attempt to convince the patient to end his own life. The central conflict of the story points to an increasingly important debate in the design of systems that use machine intelligence. What types of persuasive methods should we permit designers of machines to use, and in what contexts are certain methods inappropriate?

Read at VICE Motherboard ↗